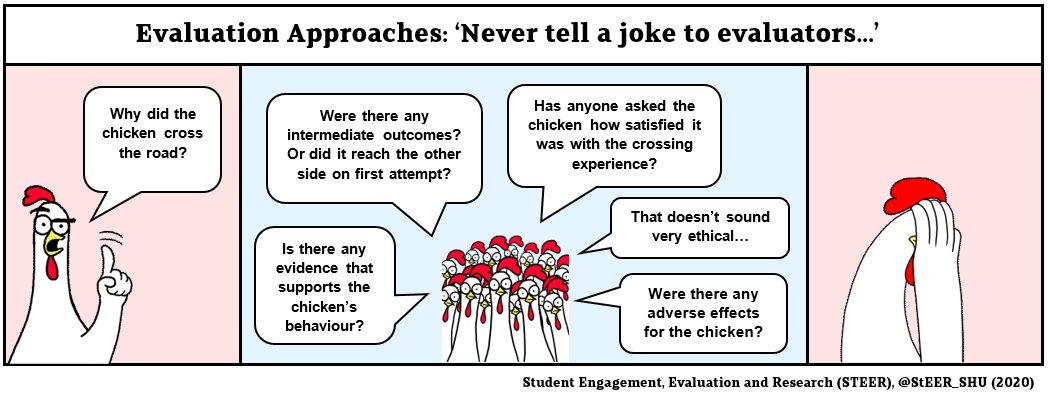

Sheffield Hallam is committed to continuous enhancement, which includes a responsibility for building an evaluative mindset across the institution. This will ensure that all activities and interventions are evaluated (for process, impact or economics), which in turn supports evidence-informed decision making.

STEER aims to help staff and students at SHU by:

- Continuing to support staff working on strategic measure programmes with iterative and systematic evidence-informed programme design and implementation.

- Developing training and development sessions for staff to build capacity and capability concerning methodology and examples of ‘what works’.

- Designing and implementing a new process of ethical approval to allow institutional service evaluations to be shared externally and inform ‘what works’ evidence bases.

- Creating and piloting an evaluation repository to communicate evaluative outputs and raise awareness of evaluative practices.

We work across four key areas within Evaluation & Research

Why is evaluation important?

Designing and evaluating effective practices are key considerations for Access and Participation work, which is regulated and monitored by the Office for Students. This has resonance across our whole institution.

There are three types of evaluation evidence that represent the standards outlined by the Office for Students (OfS):

- Narrative Evaluation: The impact evaluation provides a narrative or a coherent theory of change to motivate its selection of activities in the context of a coherent strategy.

- Empirical Evaluation: The impact evaluation collects data on impact and reports evidence that those receiving an intervention have better outcomes, though it does not establish any direct causal effect.

- Causal Evaluation: The impact evaluation methodology provides evidence of a causal effect of an intervention.

The Office for Students has produced guidance documents for collecting evidence of impact. These relate to Outreach, Access and Participation, and Financial Support.

This guidance is based on work by the Centre for Social Mobility and is complemented by TASO, which was created as a national body for supporting evidence and impact.

Additional resources

STEER in collaboration with QAA Scotland: Free webinar resources on optimising the use of evidence in higher education.

David Parsons’s work ‘Demystifying Evaluation’. Parsons (2017) identifies five key steps for effective evaluation research design, which STEER promotes for all evaluative work:

- Evaluation research is not incremental. It requires clarity of expectations and needs before all else.

- One size does not fit all. Good evaluation research design is always customised to some extent.

- Effective design is not just about methods. It needs to combine technical choices (contextualised, fit for purpose, robust) with political context (so it is understood, credible and practical).

- Method choices are led by a primary dichotomy: consideration of measurement (how much) vs. understanding (how; why). The chosen method can do both, if required.

- Good design is always proportionate to needs, circumstance and resources.

The Scottish Framework for Fair Access has also produced Evaluation Guidance, a useful set of evaluation resources aimed at raising awareness of the importance of high-quality evaluation.

TASO, created as a national body for supporting evidence and impact in higher education, also provides evaluation guidance and an evidence toolkit.

The UK Evaluation Society also has Good Practice Guidelines, which include a focus on ethical evaluation and self-evaluation.