Building an Evaluative Mindset at Hallam #3: Programme (Activity/Intervention) Design

This third blog post in the series covers the use of evidence and evaluation to inform the design of initiatives and the use of theories of change to structure this process. The previous posts in this series have focused on an overview of the approach adopted at Hallam to build an evaluative mindset and the strategic context of evaluation at the institution.

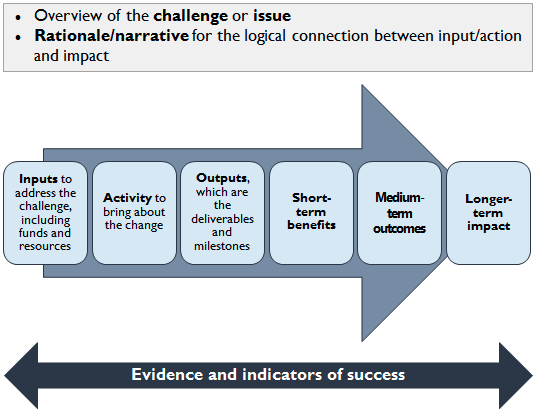

In its simplest form, the aim of a theory of change is to provide evidence of how an initiative will achieve its short, medium and long-term outcomes by considering how the individual stages of the evaluation are connected (Parsons, 2017). The process prompts evaluators to demonstrate: 1) what they are trying to achieve; 2) why an initiative is required to address the issue; 3) the causal links between the activity and the short, medium and long-term outcomes (see Figure 1).

Defining the issue and building a rationale

The first task facing evaluators is to clearly articulate the challenge that they want to address and the reasons why this is necessary. NPC (2019) advocate undertaking a situation analysis, comprising three stages, to describe the issue, its causes, its context and the resources that are available:

- Producing a clear and concise statement about the challenge that the initiative will be seeking to address.

- Unpicking the ‘challenge’ further by considering: who is affected; what are the consequences; what are the causes; what are the barriers to change; where are there gaps in knowledge?

- Thinking about the resources that are available, such as expertise, experiences and connections, and how these can be utilised most effectively.

The design of the evaluation will be considered in more detail in the next blog post, but establishing its purpose and identifying stakeholders at this stage will help shape the outcomes:

- The two most common forms of evaluation are process evaluation, which is concerned with the delivery of the activity and its outputs, and impact evaluation, which is focused on measuring the changes that result from those activities (Parsons, 2017). A set of key evaluation questions produced by Better Evaluation is a useful starting point for determining whether one or both of these types are relevant for your context.

- Relevant stakeholders who will benefit from the evaluation can have a pivotal role in shaping aims and plans by providing insights and knowledge. Using a stakeholder analysis template can help to identify and differentiate groups by their level of influence and interest (Austen & Jones-Devitt, 2019, p. 50).

- The SMART framework is a simple tool for setting effective and precise objectives (Parsons, 2017). A brief step-by-step guide has been produced by the Economic and Social Research Council.

‘Backwards mapping’: Identifying impact before the initiative

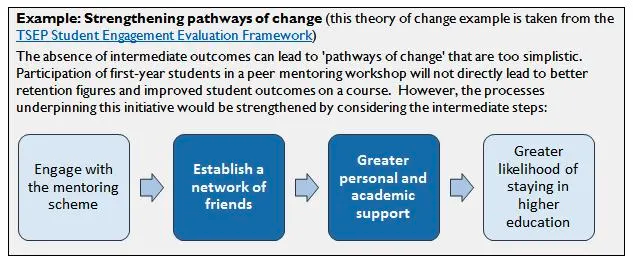

Guidance from the sector advocates determining the desired long-term impact first, before working backwards to focus on intermediate outcomes and then the makeup of the initiative (NPC, 2019). Establishing a clear long-term outcome ‘helps keep people focused and motivated’ and helps to build a ‘vision of change that transcends what they can achieve through their own efforts’ (Taplin & Clark, 2012, p. 3). Intermediate outcomes, which precede long-term impact, need to be considered because certain issues can take many years to resolve, especially those that require changes to deeply embedded practices and systems. Best practice indicates:

- The long-term changes that you want to make might be achieved beyond the duration of the initiative, such as years after the activity has occurred.

- Intermediate outcomes are more likely to focus on changes that the initiative is expected to make for those taking part in the weeks and months after the activity has taken place, for example, increases in knowledge and skills and changes of attitudes and behaviour (NPC, 2019).

- Outcomes should be clearly linked to the rationale and describe the difference that the initiative will make, for example, by indicating who and what will change (Evaluation Support Scotland, 2018). These should be shaped collectively with all stakeholder groups in order to reach a consensus and avoid misleading expectations (Parsons, 2017).

- Use the question ‘What preconditions must exist for the long-term outcome to be reached?’ to guide your thinking (Taplin & Clark, 2012).
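The backwards-mapping steps above can be sketched as a small data structure. This is only an illustrative sketch, not a tool described in the guidance: the `Outcome` class, the `backwards_map` function and the example outcomes are all hypothetical. It shows the long-term outcome being recorded first, with each precondition attached beneath it until the activity itself is reached.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Outcome:
    """One step in a theory of change (hypothetical structure)."""
    name: str
    horizon: str  # "long-term", "intermediate" or "activity"
    preconditions: list[Outcome] = field(default_factory=list)


def backwards_map(outcome: Outcome, depth: int = 0) -> list[str]:
    """List an outcome, then (indented) the preconditions it depends on."""
    lines = [f"{'  ' * depth}{outcome.horizon}: {outcome.name}"]
    for precondition in outcome.preconditions:
        lines.extend(backwards_map(precondition, depth + 1))
    return lines


# Hypothetical example: start from the long-term outcome and work backwards.
activity = Outcome("Peer-learning workshops", "activity")
knowledge = Outcome("Increased knowledge and skills", "intermediate", [activity])
attitudes = Outcome("Changed attitudes and behaviour", "intermediate", [activity])
long_term = Outcome("Stronger sense of belonging", "long-term", [knowledge, attitudes])

pathway = backwards_map(long_term)
print("\n".join(pathway))
```

Reading the printed pathway from top to bottom follows the question ‘What preconditions must exist for the long-term outcome to be reached?’ one level at a time.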

Using evidence to inform the design of the initiative

At the point of designing the initiative, decisions should be underpinned by evidence of ‘what works’ to show that its anticipated effect is realistic and feasible (Centre for Social Mobility, 2019). Referring to evidence collected previously might be sufficient to demonstrate the links in the theory of change, or new evidence can be generated by building evaluation into the design of the initiative. Making sense of this evidence is not a simple process, as there can be multiple, often conflicting, interpretations:

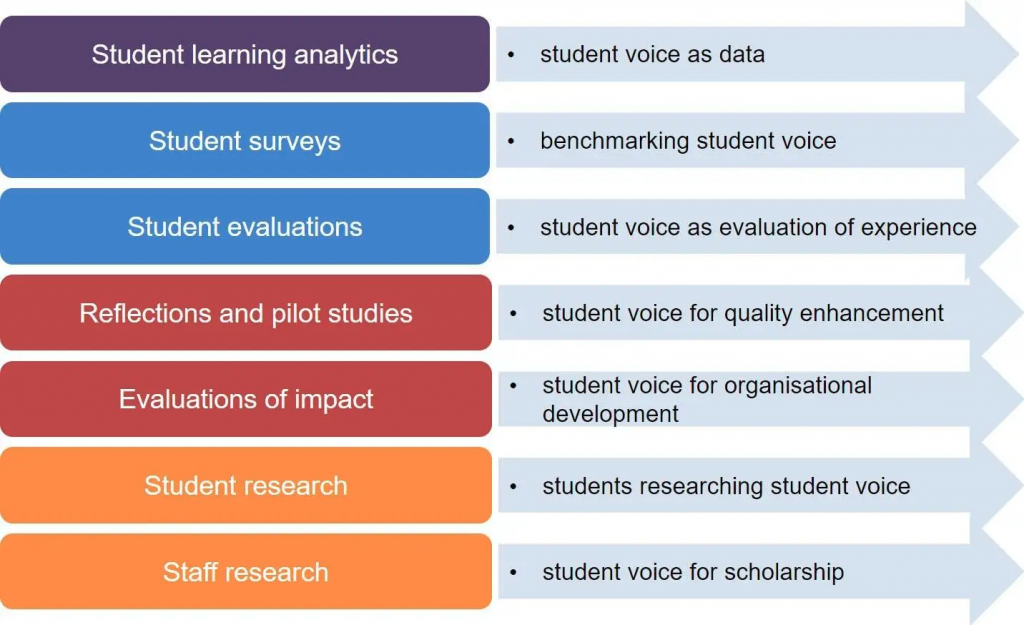

- Examine the results of previous evaluations, research and data that are available locally or nationally (Austen, 2018; Centre for Social Mobility, 2019). For example, the TSEP Student Engagement Evaluation Framework provides a useful table of sources (Thomas, 2017, p. 32).

- Repositories of evidence, such as the sector-wide Evidence and Impact Exchange and a local repository in development by STEER, will promote the communication of evaluative outputs.

- Complete the Critical Checklist for Using Evidence Effectively to evaluate your own practice, which includes guidance on assessing the appropriateness of data sources and the importance of triangulating a range of data, methods and theories (Austen & Jones-Devitt, 2019, p. 52). Additional resources include QAA Scotland’s Evidence for Enhancement webinar series, for example, the webinar on the need to question our assumptions about evidence (Jones-Devitt & Donnelly, 2018).

- Consider the contributions of ‘less traditional’ forms of evidence, such as documentary sources and researcher reflections (Austen & Jones-Devitt, 2019).

- Subject quantitative data to the same level of scrutiny as qualitative data: statistical figures, which are often presented as being ‘neutral’, are still socially constructed entities that can embody bias (Gillborn, Warmington & Demack, 2018).

Developing indicators: Measuring progress on outcomes and assessing performance

An indicator of success is a measurable value used to show progress towards meeting a specified goal. By assigning relevant indicators to each outcome, the implementation and effectiveness of an initiative can be determined (i.e. what is happening and why). Guidance from across the sector advises evaluators to:

- Set indicators that are specific, measurable and relevant to the planned activity and identified for each part of the evaluation process – the short-term, intermediate and long-term outcomes identified in the preceding stages (Centre for Social Mobility, 2019).

- Write indicators in a neutral stance, such as ‘level of’ and ‘how often’, and avoid using words that indicate change (NCVO, 2018).

- Consider indicators for outcomes that have been used by others within the institution and across the sector, as long as they are suitable for your own context, for example: 1) the TSEP Student Engagement Evaluation Framework provides ideas for indicators for outputs, outcomes and impact (Thomas, 2017, p. 29); 2) the Research Leaders Impact Toolkit (Leadership Foundation for Higher Education, 2017) contains a list of criteria to evaluate impact that includes: reach (e.g. number of individuals who have benefitted from the initiative); significance (e.g. how deeply the impact has been felt); and pathways to impact (e.g. evaluation of the plans for achieving impact).

- Set indirect or ‘proxy’ indicators for subjective outcomes that cannot be directly measured (NCVO, 2018). Robertson, Cleaver and Smart (2019) have developed a new conceptual model for mapping and evidencing ‘intangible assets’ that positively enhance the student learning experience, for example, sense of belonging and building effective relationships.

Challenging assumptions and unintended consequences

Assumptions are ‘too often unvoiced or presumed, frequently leading to confusion and misunderstanding in the operation and evaluation of the initiative’ (Center for Theory of Change). Evaluators are tasked with interrogating evaluation plans to ensure that they are credible whilst identifying any aspects that are potentially vulnerable:

- Assumptions should be checked throughout the design of the initiative, but it is useful to carry this out again at the end as part of a wider Quality Review process, which judges whether the theory is plausible, feasible and testable (Taplin & Clark, 2012).

- Invite stakeholders to a group meeting to enable perceptions and assumptions to be made explicit and to develop points of consensus (Taplin & Clark, 2012).

- Consider how the process will take into account any unintended consequences of activities, for example, by using key informant interviews and undertaking a risk assessment (Better Evaluation).

Guiding questions for challenging our assumptions (Office for Students, 2019)

As part of guidance produced by the Office for Students, a set of guiding questions was issued to stimulate thinking about the underlying assumptions of an initiative. These questions were:

- What assumptions underpin each step – each word or concept?

- What else could happen between outcomes?

- What evidence is there to support our assumptions? Are there any areas of weak evidence or gaps?

- Do we understand how and why we expect things to happen that way?

- Are there other dependencies which we have not identified?

- Are we being realistic that the resources/activities are sufficient to achieve the outcomes?

- Do power relationships affect your pathway? Where could you inadvertently cause harm?

- What would the target communities and other stakeholders think or feel?

Institutional case study: Unintended consequences (Jones-Devitt et al., 2017)

The aim of an initiative at Sheffield Hallam in 2016 was to examine whether co-design and peer-learning approaches made any positive difference to the confidence levels and sense of belonging of BAME students. However, when staff were approached to support the project at a local level, some conversations were grounded in deficit thinking about students’ skills. In light of the difficulties in engaging staff and having misjudged the ‘readiness’ of the institution, the team revised the focus of the project to awareness raising and confidence building with all staff groups across the institution. This was viewed as a positive, if unintended, outcome.

Building evaluation into the design of the initiative

Evaluation should be in place from the start of activities by agreeing the approach and action plan as part of the overall specification (Centre for Social Mobility, 2019). The risk of failing to embed evaluation during the design phase is that the data collected is not fully appropriate for the objectives that have been set.

Institutional case study: Capturing data before, during and after the initiative

The evaluation of a module aimed at developing students’ intercultural competencies and global outlook was made more robust by gathering data throughout the delivery of the initiative. Participants were asked to complete an online questionnaire before and after the activity to measure the distance travelled, rather than relying on capturing feedback only at the end point. Respondents were tracked to allow the magnitude of change to be determined at an individual level. A text analysis of reflective journals and face-to-face interviews were also used to record the development of students and to triangulate findings.
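As a minimal sketch of how ‘distance travelled’ might be calculated once respondents are tracked across both questionnaires, the example below pairs pre- and post-activity scores by respondent. The respondent IDs, the 1 to 5 scale, the scores and the `distance_travelled` function are all hypothetical and are not taken from the case study; only respondents who completed both questionnaires are compared.

```python
from statistics import mean


def distance_travelled(pre: dict[str, int], post: dict[str, int]) -> dict[str, int]:
    """Return the change (post minus pre) for respondents present in both waves."""
    matched = sorted(set(pre) & set(post))
    return {respondent: post[respondent] - pre[respondent] for respondent in matched}


# Hypothetical scores on a 1-5 scale, keyed by anonymised respondent ID.
pre_scores = {"r01": 2, "r02": 3, "r03": 4, "r04": 3}
post_scores = {"r01": 4, "r02": 3, "r03": 5, "r05": 4}

changes = distance_travelled(pre_scores, post_scores)
average_change = mean(changes.values())

print(changes)         # individual-level change for matched respondents
print(average_change)  # average change across matched respondents
```

Respondents who appear in only one wave (here `r04` and `r05`) are left out of the comparison, which is one reason tracking individuals matters: an end-point average alone could not separate real change from a change in who responded.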

References and Further Reading

Austen, L. (2018). ‘It ain’t what we do, it’s the way that we do it’ – researching student voices [Blog post]. Retrieved from https://wonkhe.com/blogs/it-aint-what-we-do-its-the-way-that-we-do-it-researching-student-voices/

Austen, L., & Jones-Devitt, S. (2019). Guide to Using Evidence. Retrieved from https://www.enhancementthemes.ac.uk/docs/ethemes/evidence-for-enhancement/guide-to-using-evidence.pdf?sfvrsn=394c981_14

Better Evaluation (n. d.). Specify the Key Evaluation Questions. Retrieved from the Better Evaluation website: https://www.betterevaluation.org/en/rainbow_framework/frame/specify_key_evaluation_questions

Better Evaluation (n. d.). Identify potential unintended results. Retrieved from the Better Evaluation website: https://www.betterevaluation.org/en/rainbow_framework/define/identify_potential_unintended_results

Center for Theory of Change (n. d.). Identifying assumptions. Retrieved from the Center for Theory of Change website: https://www.theoryofchange.org/what-is-theory-of-change/how-does-theory-of-change-work/example/identifying-assumptions/

Centre for Social Mobility (2019). Using standards of evidence to evaluate impact of outreach. Retrieved from https://www.officeforstudents.org.uk/media/f2424bc6-38d5-446c-881e-f4f54b73c2bc/using-standards-of-evidence-to-evaluate-impact-of-outreach.pdf

Economic and Social Research Council. (n. d.). Setting objectives. Retrieved from the ESRC website: https://esrc.ukri.org/research/impact-toolkit/developing-a-communications-and-impact-strategy/step-by-step-guide/setting-objectives/

Evaluation Support Scotland (2018). Setting Outcomes. Retrieved from the Evaluation Support Scotland website: http://www.evaluationsupportscotland.org.uk/media/uploads/resources/ess_sg1a_-_setting_outcomes_(feb_2018).pdf

Gillborn, D., Warmington, P., & Demack, S. (2018). QuantCrit: education, policy, ‘Big Data’ and principles for a critical race theory of statistics. Race Ethnicity and Education, 21(2), 158-179.

Jones-Devitt, S., Austen, L., Chitwood, E., Donnelly, A., Fearn, C., Heaton, C., … & Parkin, H. (2017). Creation and confidence: BME students as academic partners… but where were the staff? Journal of Educational Innovation Partnership and Change, 3(1), 278-285.

Jones-Devitt, S. & Donnelly, A. [Enhancement Themes]. (2018, November 27). Just enough? Why we need to question our assumptions about evidence [Video file]. Retrieved from https://www.youtube.com/watch?v=qzqHSzY2hjA&feature=youtu.be

Leadership Foundation for Higher Education (2017). Research Leaders Impact Toolkit: Evaluating. Retrieved from https://www.lfhe.ac.uk/en/research-resources/publications-hub/index.cfm/RLITEvaluating

NCVO. (2018). How to develop a monitoring and evaluation framework. Retrieved from the NCVO website: https://knowhow.ncvo.org.uk/how-to/how-to-develop-a-monitoring-and-evaluation-framework

NPC (2019). Theory of change in ten steps. Retrieved from: https://www.thinknpc.org/resource-hub/ten-steps/

Parsons, D. (2017). Demystifying evaluation: Practical approaches for researchers and users. Policy Press.

Robertson, A., Cleaver, E., & Smart, F. (2019). Beyond the metrics: identifying, evidencing and enhancing the less tangible assets of higher education. QAA Scotland. Retrieved from https://www.enhancementthemes.ac.uk/current-enhancement-theme/defining-and-capturing-evidence/the-intangibles-beyond-the-metrics

Student Engagement, Evaluation and Research [STEER] (n. d.). Evaluation. Retrieved from https://blogs.shu.ac.uk/steer/evaluation/

Taplin, D. H., & Clark, H. (2012). Theory of change basics: A primer on theory of change. New York: Actknowledge. Retrieved from http://www.theoryofchange.org/wp-content/uploads/toco_library/pdf/ToCBasics.pdf

Thomas, L. (2017). Evaluating student engagement activity: Report, evaluation framework and guidance. The Student Engagement Partnership. Retrieved from: http://tsep.org.uk/wp-content/uploads/2017/06/Student-Engagement-Evaluation-Framework-and-Report.pdf
